Restricted Data

The Nuclear Secrecy Blog


Meditations

Liminal 1946: A Year in Flux

Friday, November 8th, 2013

There are lots of important and exciting years that people like to talk about when it comes to the history of nuclear weapons. 1945 obviously gets pride of place, being the year of the first nuclear explosion ever (Trinity), the first and only uses of the weapons in war (Hiroshima and Nagasaki), and the end of World War II (and thus the beginning of the postwar world). 1962 gets brought up because of the Cuban Missile Crisis. 1983 has been making a resurgence in our nuclear consciousness, thanks to lots of renewed interest in the Able-Archer war scare. All of these dates are, of course, super important.

But one of my favorite historical years is 1946. It’s easy to overlook — while there are some important individual events that happen, none of them are as cataclysmic as some of the events of the aforementioned years, or even some of the other important big years. But, as I was reminded last week while going through some of the papers of David Lilienthal and Bernard Baruch that were in the Princeton University archives, 1946 was something special in and of itself. It is not the big events that define 1946, but the fact that it was a liminal year, a transition period between two orders. For policymakers in the United States, 1946 was when the question of “what will the country’s attitude towards the bomb be?” was still completely up for grabs, but over the course of the year, things became more set in stone.

1946 was a brief period when anything seemed possible. When nothing had yet calcified. The postwar situation was still fluid, and the American approach towards the bomb still unclear.

Part of the reason for this is that things went a little off the rails in 1945. The bombs were dropped, the war had ended, and people were pretty happy about all of that. General Groves et al. assumed that Congress would basically adopt their recommendations for how the bomb should be regarded in the postwar (by passing the May-Johnson Bill, which military lawyers, with help from Vannevar Bush and James Conant, drafted in the final weeks of World War II). At first, it looked like this was going to happen; after all, hadn’t Groves “succeeded” during the war? But in the waning months of 1945, this consensus rapidly deteriorated. The atomic scientists of the Manhattan Project who had been dissatisfied with the Army turned out to make a formidable lobby, and they found allies among a number of Senators. The most important of these was first-term Senator Brien McMahon, who quickly saw an opportunity to jump into the limelight by making atomic energy his issue. By the end of the year, not only had Congressional support for the Army’s bill fallen flat, but even Truman had withdrawn his support for it. In its place, McMahon proposed a bill that looked like something the scientists would have written: a much freer, less secret, civilian-run plan for atomic energy.

So what happened in 1946? Let’s just jot down a few of the big things I have in mind.

January: The United Nations meets for the first time. Kind of a big deal. The UN Atomic Energy Commission is created to sort out questions about the future of nuclear technology on a global scale. Hearings on the McMahon Bill continue in Congress through February.

February: The first Soviet atomic spy ring is made public when General Groves leaks information about Igor Gouzenko to the press. Groves wasn’t himself too concerned about it — it was only a Canadian spy ring, and Groves had compartmentalized the Canadians out of anything he considered really important — but it served the nice purpose of dashing the anti-secrecy lobby onto the rocks.

February: The first Soviet atomic spy ring is made public when General Groves leaks information about Igor Gouzenko to the press. Groves wasn’t himself too concerned about it — it was only a Canadian spy ring, and Groves had compartmentalized the Canadians out of anything he considered really important — but it served the nice purpose of dashing the anti-secrecy lobby onto the rocks.

Also in February, George F. Kennan sends his famous “Long Telegram” from Moscow, arguing that the Soviet Union sees itself in essential, permanent conflict with the West and is not likely to liberalize anytime soon. Kennan argues that containment of the USSR through “strong resistance” is the only viable course for the United States.

March: The Manhattan Engineer District’s Declassification Organization starts full operation. Groves had asked the top Manhattan Project scientists to come up with the first declassification rules in November 1945, when he realized that Congress wasn’t going to be passing legislation as soon as he expected. They came up with the first declassification procedures and the first declassification guides, inaugurating the first systematic approach to deciding what was secret and what was not.

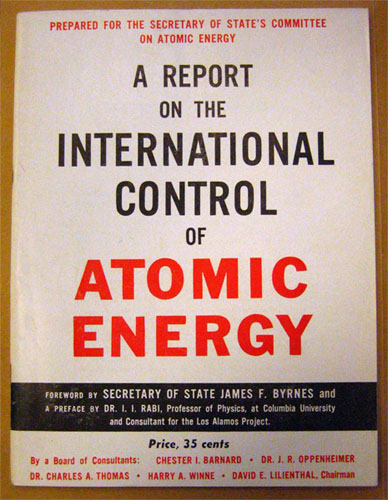

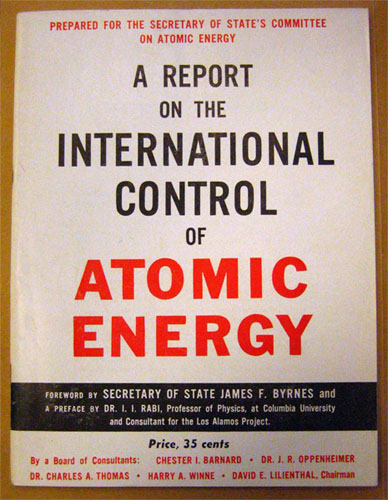

March: The Acheson-Lilienthal Report is completed and submitted, in secret, to the State Department. It is quickly leaked and then was followed up by a legitimate publication by the State Department. Created by a sub-committee of advisors, headed by TVA Chairman David Lilienthal and with technical advice provided by J. Robert Oppenheimer, the Acheson-Lilienthal Report argued that the only way to a safe world was through “international control” of atomic energy. The scheme they propose is that the United Nations create an organization (the Atomic Development Authority) that would be granted full control over world uranium stocks and would have the ability to inspect all facilities that used uranium in significant quantities. Peaceful applications of atomic energy would be permitted, but making nuclear weapons would not be. If one thought of it as the Nuclear Non-Proliferation Treaty, except without any authorized possession of nuclear weapons, one would not be too far off the mark. Of note is that it is an approach to controlling the bomb that is explicitly not about secrecy, but about physical control of materials. It is not loved by Truman and his more hawkish advisors (e.g. Secretary of State Byrnes), but because of its leak and subsequent publication under State Department header, it is understood to be “the” position of the United States government on the issue.

March: The Acheson-Lilienthal Report is completed and submitted, in secret, to the State Department. It is quickly leaked and then was followed up by a legitimate publication by the State Department. Created by a sub-committee of advisors, headed by TVA Chairman David Lilienthal and with technical advice provided by J. Robert Oppenheimer, the Acheson-Lilienthal Report argued that the only way to a safe world was through “international control” of atomic energy. The scheme they propose is that the United Nations create an organization (the Atomic Development Authority) that would be granted full control over world uranium stocks and would have the ability to inspect all facilities that used uranium in significant quantities. Peaceful applications of atomic energy would be permitted, but making nuclear weapons would not be. If one thought of it as the Nuclear Non-Proliferation Treaty, except without any authorized possession of nuclear weapons, one would not be too far off the mark. Of note is that it is an approach to controlling the bomb that is explicitly not about secrecy, but about physical control of materials. It is not loved by Truman and his more hawkish advisors (e.g. Secretary of State Byrnes), but because of its leak and subsequent publication under State Department header, it is understood to be “the” position of the United States government on the issue.

April: The McMahon Act gets substantial modifications while in committee, including the creation of a Military Liaison Committee (giving the military an official position in the running of the Atomic Energy Commission) and the introduction of a draconian secrecy provision (the “restricted data” concept that this blog takes its name from).

June: The Senate passes the McMahon Act. The House starts to debate it. Several changes are made to the House version of the bill — notably all employees with access to “restricted data” must now be investigated by the FBI and the penalty for misuse or espionage of “restricted data” is increased to death or life imprisonment. Both of these features were kept in the final version submitted to the President for signature in July.

June: Bernard Baruch, Truman’s appointee to head the US delegation of the UN Atomic Energy Commission, presents a modified form of the Acheson-Lilienthal Report to the UNAEC, dubbed the Baruch Plan. Some of the modifications are substantial, and are deeply resented by people like Oppenheimer who see them as torpedoing the plan. The Baruch Plan, for example, considered the question of what to do about violations of the agreement something that needed to be hashed out explicitly and well in advance. It also argued that the United States would not destroy its (still tiny) nuclear stockpile until the Soviet Union had proven it was not trying to build a bomb of their own. It was explicit about the need for full inspections of the USSR — a difficulty in an explicitly closed society — and stripped the UN Security Council of veto power when it came to enforcing violations of the treaty. The Soviets were, perhaps unsurprisingly, resistant to all of these measures. Andrei Gromyko proposes a counter-plan which, like the Baruch Plan, prohibits the manufacture and use of atomic weaponry. However, it requires full and immediate disarmament by the United States before anything else would go into effect, and excludes any international role in inspection or enforcement: states would self-regulate on this front.

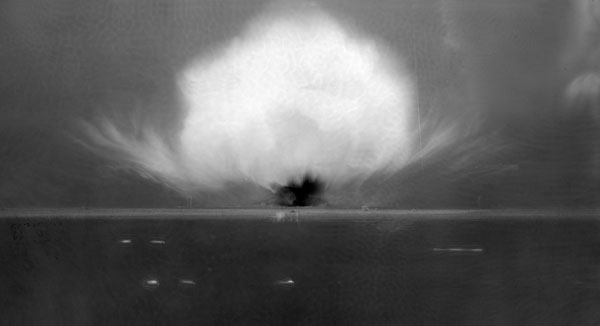

July: The first postwar nuclear test series, Operation Crossroads, begins in the Bikini Atoll, Marshall Islands. Now this is a curious event. Ostensibly the United States was in favor of getting rid of nuclear weapons, and in fact had not yet finalized its domestic legislation about the bomb. But at the same time, it planned to set off three of them, to see their effect on naval vessels. (They decided to only set off two, in the end.) The bombs were themselves still secret, of course, but it was decided that this event should be open to the world and its press. Even the Soviets were invited! As one contemporary report summed up:

July: The first postwar nuclear test series, Operation Crossroads, begins in the Bikini Atoll, Marshall Islands. Now this is a curious event. Ostensibly the United States was in favor of getting rid of nuclear weapons, and in fact had not yet finalized its domestic legislation about the bomb. But at the same time, it planned to set off three of them, to see their effect on naval vessels. (They decided to only set off two, in the end.) The bombs were themselves still secret, of course, but it was decided that this event should be open to the world and its press. Even the Soviets were invited! As one contemporary report summed up:

September: Baruch tells Truman that international control of atomic energy seems nowhere in sight. The Soviet situation has soured dramatically over the course of the year. The Soviets’ international control plan, the Gromyko Plan, requires full faith in Stalin’s willingness to self-regulate. Stalin, for his part, is not willing to sign a pledge of disarmament and inspection while the United States is continuing to build nuclear weapons. It is clear to Baruch, and even to more liberal-minded observers like Oppenheimer, that the Soviets are probably not going to play ball on any of this, because it would not only require them to forswear a potentially important weapon, but because any true plan would require them to become a much more open society.

September: Baruch tells Truman that international control of atomic energy seems nowhere in sight. The Soviet situation has soured dramatically over the course of the year. The Soviets’ international control plan, the Gromyko Plan, requires full faith in Stalin’s willingness to self-regulate. Stalin, for his part, is not willing to sign a pledge of disarmament and inspection while the United States is continuing to build nuclear weapons. It is clear to Baruch, and even to more liberal-minded observers like Oppenheimer, that the Soviets are probably not going to play ball on any of this, because it would not only require them to forswear a potentially important weapon, but because any true plan would require them to become a much more open society.

October: Truman appoints David Lilienthal as the Chairman of the Atomic Energy Commission. Lilienthal is enthusiastic about the job — a New Deal technocrat, he thinks that he can use his position to set up a fairly liberal approach to nuclear technology in the United States. He is quickly confronted by the fact that the atomic empire established by the Manhattan Engineer District has decayed appreciably in year after the end of the war, and that he has powerful enemies in Congress and in the military. His confirmation hearings start in early 1947, and are exceptionally acrimonious. I love Lilienthal as an historical figure, because he is an idealist who really wants to accomplish good things, but ends up doing almost the opposite of what he set out to do. To me this says a lot about the human condition.

November: The US Atomic Energy Commission meets for the first time in Oak Ridge, Tennessee. They adopt the declassification system of the Manhattan District, among other administrative matters.

December: Meredith Gardner, a cryptanalyst for the US Army Signal Intelligence Service, achieves a major breakthrough in decrypting wartime Soviet cables. A cable from 1944 contains a list of scientists working at Los Alamos — indications of a serious breach in wartime atomic security, potentially much worse than the Canadian spy ring. This information is kept extremely secret, however, as this work becomes a major component in the VENONA project, which (years later) leads to the discovery of Klaus Fuchs, Julius Rosenberg, and many other Soviet spies.

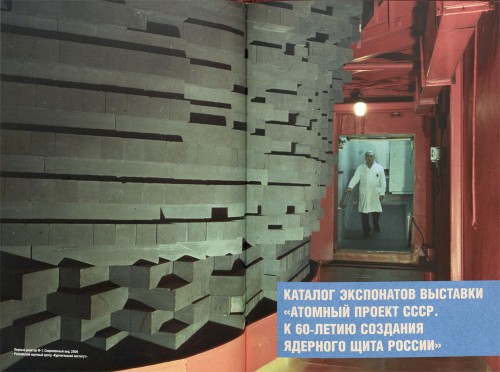

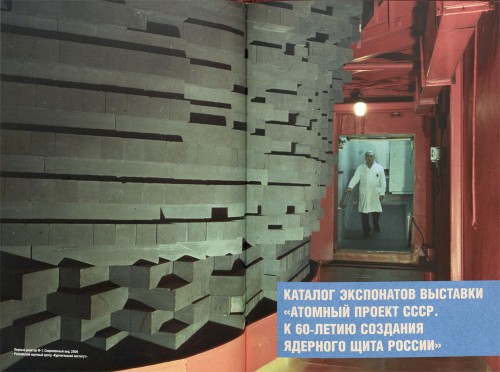

On Christmas Day, 1946, the Soviet Union’s first experimental reactor, F-1, goes critical for the first time.

No single event on that list stands out as on par with Hiroshima, the Cuban Missile Crisis, or even the Berlin Crisis. But taken together, I think, the list makes a strong argument for the importance of 1946. When one reads the documents from this period, one gets this sense of a world in flux. On the one hand, you have people who are hoping that the re-ordering of the world after World War II will present an enormous opportunity for creating a more peaceful existence. The ideas of world government, of the banning of nuclear weapons, of openness and prosperity, seem seriously on the table. And not just by members of the liberal elite, mind you: even US Army Generals were supporting these kinds of positions! And yet, as the year wore on, the hopes began to fade. Harsher analysis began to prevail. Even the most optimistic observers started to see that the problems of the old order weren’t going away anytime soon, that no amount of good faith was going to get Stalin to play ball. Which is, I should say, not to put all of the onus on the Soviets, as intractable as they were, and as awful as Stalin was. One can imagine a Cold War that was less tense, less explicitly antagonistic, less dangerous, even with limitations that the existence of a ruler like Stalin imposed. But some of the more hopeful things seem, with reflection, like pure fantasy. This is Stalin we’re talking about, after all. Roosevelt might have been able to sweet talk him for awhile, but even that had its limits.

No single event on that list stands out as on par with Hiroshima, the Cuban Missile Crisis, or even the Berlin Crisis. But taken together, I think, the list makes a strong argument for the importance of 1946. When one reads the documents from this period, one gets this sense of a world in flux. On the one hand, you have people who are hoping that the re-ordering of the world after World War II will present an enormous opportunity for creating a more peaceful existence. The ideas of world government, of the banning of nuclear weapons, of openness and prosperity, seem seriously on the table. And not just by members of the liberal elite, mind you: even US Army Generals were supporting these kinds of positions! And yet, as the year wore on, the hopes began to fade. Harsher analysis began to prevail. Even the most optimistic observers started to see that the problems of the old order weren’t going away anytime soon, that no amount of good faith was going to get Stalin to play ball. Which is, I should say, not to put all of the onus on the Soviets, as intractable as they were, and as awful as Stalin was. One can imagine a Cold War that was less tense, less explicitly antagonistic, less dangerous, even with limitations that the existence of a ruler like Stalin imposed. But some of the more hopeful things seem, with reflection, like pure fantasy. This is Stalin we’re talking about, after all. Roosevelt might have been able to sweet talk him for awhile, but even that had its limits.

We now know, of course, that the Soviet Union was furiously trying to build its own atomic arsenal in secret during this entire period. We also know that the US military was explicitly expecting to rely on atomic weapons in any future conflict, in order to offset the massive Soviet conventional advantage that existed at the time. We know that there was extensive Soviet espionage in the US government and its atomic program, although not as extensive as fantasists like McCarthy thought. We also know, through hard experience, that questions of treaty violations and inspections didn’t go away over time — if anything, I think, the experience of the Nuclear Non-Proliferation Treaty has shown that many of Baruch’s controversial changes to the Acheson-Lilienthal Report were pretty astute, and quickly got to the center of the political difficulties that all arms control efforts present.

As an historian, I love periods of flux and of change. (As an individual, I know that living in “interesting times” can be pretty stressful!) I love looking at where old orders break down, and new orders emerge. The immediate postwar is one such period — where ideas were earnestly discussed that seemed utterly impossible only a few years later. Such periods provide little windows into “what might have been,” alternative futures and possibilities that never happened, while also reminding us of the forces that bent things to the path they eventually went on.

But one of my favorite historical years is 1946. It’s easy to overlook — while there are some important individual events that happen, none of them are as cataclysmic as some of the events of the aforementioned years, or even some of the other important big years. But, as I was reminded last week while going through some of the papers of David Lilienthal and Bernard Baruch that were in the Princeton University archives, 1946 was something special in and of itself. It is not the big events that define 1946, but the fact that it was a liminal year, a transition period between two orders. For policymakers in the United States, 1946 was when the question of “what will the country’s attitude towards the bomb be?” was still completely up for grabs, but over the course of the year, things became more set in stone.

1946 was a brief period when anything seemed possible. When nothing had yet calcified. The postwar situation was still fluid, and the American approach towards the bomb still unclear.

Part of the reason for this is because things went a little off the rails in 1945. The bombs were dropped, the war had ended, people were pretty happy about all of that. General Groves et al. assumed that Congress would basically take their recommendations for how the bomb should be regarded in the postwar (by passing the May-Johnson Bill, which military lawyers, with help from Vannevar Bush and James Conant, drafted in the final weeks of World War II). At first, it looked like this was going to happen — after all, didn’t Groves “succeed” during the war? But in the waning months of 1945, this consensus rapidly deteriorated. The atomic scientists on the Manhattan Project who had been dissatisfied with the Army turned out to make a formidable lobby, and they found allies amongst a number of Senators. Most important of these was first-term Senator Brien McMahon, who quickly saw an opportunity to jump into the limelight by making atomic energy his issue. By the end of the year, not only did Congressional support fall flat for the Army’s Bill, but even Truman had withdrawn support for it. In its place, McMahon suggested a bill that looked like something the scientists would have written — a much freer, less secret, civilian-run plan for atomic energy.

So what happened in 1946? Let’s just jot off a few of the big things I have in mind.

January: The United Nations meets for the first time. Kind of a big deal. The UN Atomic Energy Commission is created to sort out questions about the future of nuclear technology on a global scale. Hearings on the McMahon Bill continue in Congress through February.

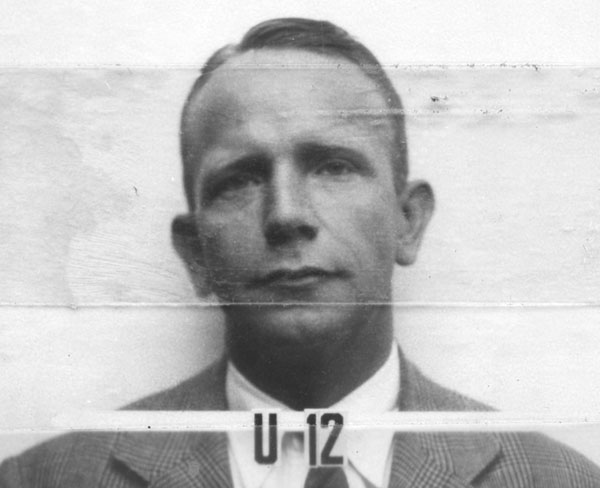

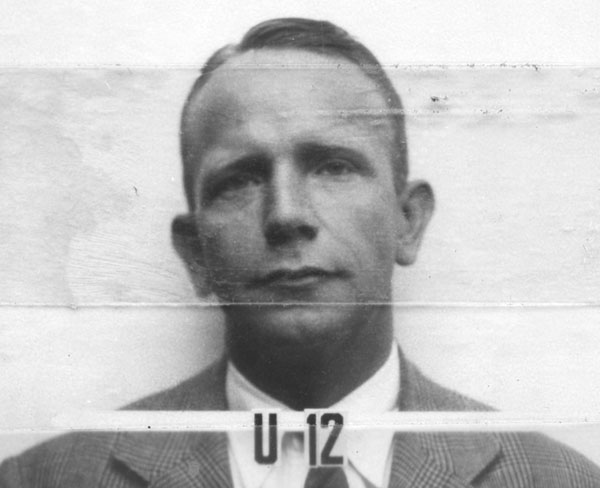

Igor Gouzenko (masked) promoting a novel in 1954. The mask was to help him maintain his anonymity, but you have to admit it adds a wonderfully surreal and theatrical aspect to the whole thing.

Also in February, George F. Kennan sends his famous “Long Telegram” from Moscow, arguing that the Soviet Union sees itself in essential, permanent conflict with the West and is not likely to liberalize anytime soon. Kennan argues that containment of the USSR through “strong resistance” is the only viable course for the United States.

March: The Manhattan Engineer District’s Declassification Organization starts full operation. Groves had asked the top Manhattan Project scientists to come up with the first declassification rules in November 1945, when he realized that Congress wasn’t going to be passing legislation as soon as he expected. They came up with the first declassification procedures and the first declassification guides, inaugurating the first systematic approach to deciding what was secret and what was not.

Lilienthal’s own copy of the mass-market edition of the Acheson-Lilienthal Report, from the Princeton University Archives.

April: The McMahon Act gets substantial modifications while in committee, including the creation of a Military Liaison Committee (giving the military an official position in the running of the Atomic Energy Commission) and the introduction of a draconian secrecy provision (the “restricted data” concept that this blog takes its name from).

June: The Senate passes the McMahon Act. The House starts to debate it. Several changes are made to the House version of the bill — notably all employees with access to “restricted data” must now be investigated by the FBI and the penalty for misuse or espionage of “restricted data” is increased to death or life imprisonment. Both of these features were kept in the final version submitted to the President for signature in July.

June: Bernard Baruch, Truman’s appointee to head the US delegation of the UN Atomic Energy Commission, presents a modified form of the Acheson-Lilienthal Report to the UNAEC, dubbed the Baruch Plan. Some of the modifications are substantial, and are deeply resented by people like Oppenheimer who see them as torpedoing the plan. The Baruch Plan, for example, considered the question of what to do about violations of the agreement something that needed to be hashed out explicitly and well in advance. It also argued that the United States would not destroy its (still tiny) nuclear stockpile until the Soviet Union had proven it was not trying to build a bomb of their own. It was explicit about the need for full inspections of the USSR — a difficulty in an explicitly closed society — and stripped the UN Security Council of veto power when it came to enforcing violations of the treaty. The Soviets were, perhaps unsurprisingly, resistant to all of these measures. Andrei Gromyko proposes a counter-plan which, like the Baruch Plan, prohibits the manufacture and use of atomic weaponry. However, it requires full and immediate disarmament by the United States before anything else would go into effect, and excludes any international role in inspection or enforcement: states would self-regulate on this front.

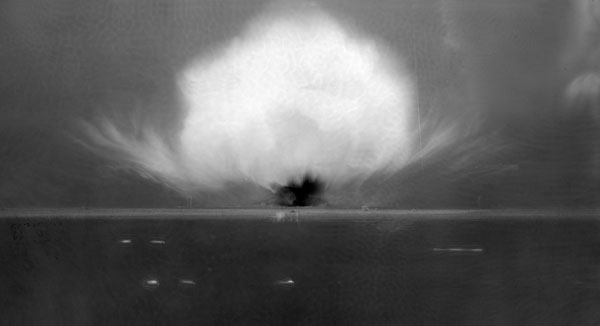

Shot “Baker” of Operation Crossroads — one of the more famous mushroom clouds of all time. Note that the mushroom cloud itself is not the wide cloud you see there (which is a brief condensation cloud caused by it being an underwater detonation), but is the more bulbous cloud you see peaking out of the top of that cloud. You can see the battleships used for target practice near base of the cloud. The dark mark on the right side of the stem may be an upturned USS Arkansas.

The unique nature of the operation was inherent not only in its huge size — the huge numbers of participating personnel, and the huge amounts of test equipment and number of instruments involved — it was inherent also in the tremendous glare of publicity to which the tests were exposed, and above all the the extraordinary fact that the weapons whose performance was exposed to this publicity were still classified, secret, weapons, which had never even been seen except by a few men in the inner circles of the Manhattan District and by those who had assisted in the three previous atomic bomb detonations. It has been truly said that the operation was “the most observed, most photographed, most talked-of scientific test ever conducted.” Paradoxically, it may also be said that it was the most publicly advertised secret test ever conducted.1August: Truman signs the McMahon Act into law, and it becomes the Atomic Energy Act of 1946. It stipulates that a five-person Atomic Energy Commission will run all of the nation’s domestic atomic energy affairs, and while half of the law retains the “free and open” approach of the early McMahon Act, the other half has a very conservative and restrictive flavor to it, promising death and imprisonment to anyone who betrays atomic secrets. The paradox is explicit, McMahon explained at the time, because finding a way to implement policy between those two extremes would produce rational discussion. Right. Did I mention he was a first-term Senator? The Atomic Energy Commission would take over from the Manhattan Engineer District starting in 1947.

A meeting of the UN Atomic Energy Commission in October 1946. At front left, speaking, is Andrei Gromyko. Bernard Baruch is the white-haired man sitting at the table at right behind the “U.S.A” plaque. At far top-right of the photo is a pensive J. Robert Oppenheimer. Two people above Baruch, in the very back, is a bored-looking General Groves. Directly below Groves is Manhattan Project scientist Richard Tolman. British physicist James Chadwick sits directly behind the U.K. representative at the table.

October: Truman appoints David Lilienthal as the Chairman of the Atomic Energy Commission. Lilienthal is enthusiastic about the job — a New Deal technocrat, he thinks that he can use his position to set up a fairly liberal approach to nuclear technology in the United States. He is quickly confronted by the fact that the atomic empire established by the Manhattan Engineer District has decayed appreciably in year after the end of the war, and that he has powerful enemies in Congress and in the military. His confirmation hearings start in early 1947, and are exceptionally acrimonious. I love Lilienthal as an historical figure, because he is an idealist who really wants to accomplish good things, but ends up doing almost the opposite of what he set out to do. To me this says a lot about the human condition.

November: The US Atomic Energy Commission meets for the first time in Oak Ridge, Tennessee. They adopt the declassification system of the Manhattan District, among other administrative matters.

December: Meredith Gardner, a cryptanalyst for the US Army Signal Intelligence Service, achieves a major breakthrough in decrypting wartime Soviet cables. A cable from 1944 contains a list of scientists working at Los Alamos — indications of a serious breach in wartime atomic security, potentially much worse than the Canadian spy ring. This information is kept extremely secret, however, as this work becomes a major component in the VENONA project, which (years later) leads to the discovery of Klaus Fuchs, Julius Rosenberg, and many other Soviet spies.

On Christmas Day, 1946, the Soviet Union’s first experimental reactor, F-1, goes critical for the first time.

The Soviet F-1 reactor, in 2009. It remains operational today — the longest-lived nuclear reactor by far.

We now know, of course, that the Soviet Union was furiously trying to build its own atomic arsenal in secret during this entire period. We also know that the US military was explicitly expecting to rely on atomic weapons in any future conflict, in order to offset the massive Soviet conventional advantage that existed at the time. We know that there was extensive Soviet espionage in the US government and its atomic program, although not as extensive as fantasists like McCarthy thought. We also know, through hard experience, that questions of treaty violations and inspections didn’t go away over time — if anything, I think, the experience of the Nuclear Non-Proliferation Treaty has shown that many of Baruch’s controversial changes to the Acheson-Lilienthal Report were pretty astute, and quickly got to the center of the political difficulties that all arms control efforts present.

As an historian, I love periods of flux and of change. (As an individual, I know that living in “interesting times” can be pretty stressful!) I love looking at where old orders break down, and new orders emerge. The immediate postwar is one such period — where ideas were earnestly discussed that seemed utterly impossible only a few years later. Such periods provide little windows into “what might have been,” alternative futures and possibilities that never happened, while also reminding us of the forces that bent things to the path they eventually went on.

Notes

1. Manhattan District History, Book VIII, Los Alamos Project (Y) – Volume 3, Auxiliary Activities, Chapter 8, Operation Crossroads (n.d., ca. 1946).

News and Notes

Webcast: “What’s become of our nuclear golden age?”

Monday, September 9th, 2013

Update: The video has been posted online, enjoy!

We no longer live in the nuclear age, or, at least, we don’t think we do (or so I concluded a while back). But that won’t stop me from talking about it! This Wednesday, September 11th, 2013, I will be participating in a live webcast at the Chemical Heritage Foundation in Philadelphia:

On Sept. 11, 2013 the Chemical Heritage Foundation will present a live online video discussion, “Power and Promise: What’s become of our nuclear golden age?” Guests Alex Wellerstein and Linda Richards will take stock of our turbulent nuclear past and look at how it has shaped our current attitudes, for better and for worse.

This should be a fun thing, as Linda and I take somewhat different approaches (both interesting) to many nuclear issues, and the CHF team asks great questions. You can Tweet in questions for the show with the right hashtag (#HistChem) and they may somehow magically get to us while we’re talking. And hey, I’ll be wearing a suit!

Some say we are on the verge of a bright nuclear future in which nuclear power will play a major role in responding to climate change. Others say that we should expect more Fukushimas. Whichever way our nuclear future goes, there will be energy and environmental tradeoffs. On CHF’s blog you can decide on the tradeoffs you are willing to make. Tweet to vote your choices. Viewers can also tweet questions to the guests before or during the show by using the hashtag #HistChem.

“Power and Promise: What’s Become of Our Nuclear Golden Age?” will air at 6 p.m. EST. Watch the livecast episode at www.chemheritage.org/live.

Guest Bios:

Alex Wellerstein is an associate historian at the Center for History of Physics at the American Institute of Physics. He holds a Ph.D. in the history of science from Harvard University and his research interests include the history of Cold War technology, including nuclear technology. He blogs at http://blog.nuclearsecrecy.com/.

Linda M. Richards is a former CHF fellow and will be returning in 2014 as a Doan Fellow. She is working on a Ph.D. on nuclear history at Oregon State University. Her dissertation is titled “Rocks and Reactors: The Origins of Radiation Exposure Disparity, 1941-1979.” In 2012 she received a National Science Foundation grant that took her to the International Atomic Energy Agency (IAEA) in Vienna, UN agencies and archives in Geneva, and to North American indigenous uranium mining sites.

About the Show:

#HistChem is a monthly interactive livestreamed show produced by the Chemical Heritage Foundation. It features topically compelling issues that intersect science, history and culture. Hosts are Michal Meyer, editor of Chemical Heritage Magazine, and Bob Kenworthy, a CHF staff member and chemist. The first episode, “How We Learned to Stop Worrying and Love the Zombie Apocalypse,” debuted in August 2013. Follow the show and related news at chemheritage.org/media.

About the Chemical Heritage Foundation:

The Chemical Heritage Foundation is a collections-based nonprofit organization that preserves the history and heritage of chemistry, chemical engineering, and related sciences and technologies. The collections are used to create a body of original scholarship that illuminates chemistry’s role in shaping society. In bridging science with the humanities, arts, and social sciences, CHF is committed to building a vibrant, international community of scholars; creating a rich source of traditional and emerging media; expanding the reach of our museum; and engaging the broader society through inventive public events.

Update: The video has been posted online, enjoy!

Meditations | Visions

What the NUKEMAP taught me about fallout

Friday, August 2nd, 2013

One of the most technically difficult aspects of the new NUKEMAP was the fallout generation code. I know that in practice it looks like just a bunch of not-too-complicated ellipses, but finding a fallout code that would provide what I considered to be necessary flexibility proved to be a very long search indeed. I had started working on it sometime in 2012, got frustrated, returned to it periodically, got frustrated again, and finally found the model I eventually used — Carl Miller’s Simplified Fallout Scaling System — only a few months ago.

The fallout model used is what is known as a “scaling” model. This is in contrast with what Miller terms a “mathematical” model, which is a much more complicated beast. A scaling model lets you input only a few simple parameters (e.g. warhead yield, fission fraction, and wind speed), and the output is the kind of idealized contours seen in the NUKEMAP. This model, obviously, doesn’t capture the complexities of real life, but as a rough indication of the type of radioactive contamination expected, and over what kind of area, it has its uses. The mathematical model requires much more complicated wind parameters (such as the various wind speeds and shears at different altitudes) and tries to produce something that looks more “realistic.”
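To make the idea of “idealized contours” a bit more concrete, here is a minimal sketch of how one such ellipse could be drawn once a scaling model has supplied its dimensions. It is my own illustration, not Miller’s system or the NUKEMAP code: the function name `idealized_contour`, its parameters, and the flat x/y map in kilometers are all assumptions, and the contour lengths in the usage lines are made-up placeholders rather than model output.

```python
import math


def idealized_contour(gz_x_km, gz_y_km, downwind_km, upwind_km, width_km,
                      wind_toward_deg, n_points=64):
    """Return (x, y) points, in km, outlining one idealized elliptical contour.

    Hypothetical helper. The ellipse's long axis lies along the wind: it reaches
    upwind_km behind ground zero and downwind_km ahead of it, and width_km is
    its full crosswind width. wind_toward_deg is the direction the wind blows
    toward, measured counterclockwise from the +x axis on a flat x/y plane.
    """
    semi_major = (downwind_km + upwind_km) / 2.0
    semi_minor = width_km / 2.0
    # Shift the ellipse's center downwind so its far end sits at downwind_km.
    center_offset = (downwind_km - upwind_km) / 2.0
    theta = math.radians(wind_toward_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)

    points = []
    for i in range(n_points):
        phi = 2.0 * math.pi * i / n_points
        u = center_offset + semi_major * math.cos(phi)  # along-wind coordinate
        v = semi_minor * math.sin(phi)                  # crosswind coordinate
        # Rotate from wind-aligned coordinates into map coordinates.
        points.append((gz_x_km + u * cos_t - v * sin_t,
                       gz_y_km + u * sin_t + v * cos_t))
    return points


# Hypothetical contour dimensions, for illustration only (not model output).
outline = idealized_contour(0.0, 0.0, downwind_km=100.0, upwind_km=5.0,
                            width_km=20.0, wind_toward_deg=0.0)
xs = [x for x, _ in outline]
ys = [y for _, y in outline]
print(f"x from {min(xs):.1f} to {max(xs):.1f} km, max half-width {max(ys):.1f} km")
```

Each dose-rate contour would get its own ellipse; the simplicity of the shapes is part of what makes it obvious that this is a model and not a forecast.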

The mathematical models are harder to get ahold of (the government has a few of them, but they don’t release them to non-government types like me) and require more computational power (instead of running in less than a second, they take several minutes even on a modern machine). If I had one, I would probably try to implement it, but I don’t totally regret using the scaling model. It does very well at communicating both the general technical point about fallout and the fact that it is an idealized model. I would prefer people to look at a model and have no illusions that it is, indeed, just a model, as opposed to some kind of simulation whose slickness might engender false confidence.

Working on the fallout model, though, made me realize how little I really understood about nuclear fallout. I mean, my general understanding was still right, but I had a few subtle-but-important revelations that changed the way I thought about nuclear exchanges in general.

Fallout from a total nuclear exchange, in watercolors. From the Saturday Evening Post, March 23, 1963.

The most important one is that fallout is primarily a product of surface bursts. That is, the chief determinant of whether there is local fallout or not is whether the nuclear fireball touches the ground. Airbursts where the fireball doesn’t touch the ground don’t really produce fallout worth talking about — even if they are very large.

I read this in numerous fallout models and effects books and thought, can this be right? What’s the ground got to do with it? A whole lot, apparently. The nuclear fireball is full of highly-radioactive fission products. For airbursts, the cloud goes pretty much straight up, and those particles are light enough and hot enough that they pretty much just hang out at the top of the cloud. By the time they cool down enough to start to “fall out” of the cloud, they have diffused into the atmosphere and also decayed quite a bit.1 So they are basically not an issue for people on the ground — you end up with exposures in the tenths or hundredths of rads, which isn’t exactly nothing but is pretty low. This is more or less what was found at Hiroshima and Nagasaki — there were a few places where fallout had deposited, but it was extremely limited and very low in radiation, as you’d expect from those two airbursts.
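The “decayed quite a bit” part can be illustrated with the standard textbook rule of thumb that the dose rate from mixed fission products falls off roughly as t^-1.2, with t in hours after detonation. This sketch is only that approximation, not the fallout model NUKEMAP uses; the function name and the H+1 reference rate of 1,000 rads/hr are hypothetical.

```python
def dose_rate(r1_rads_per_hr, t_hours):
    """Approximate fission-product dose rate t_hours after detonation.

    Uses the textbook t**-1.2 rule of thumb, scaled so the rate equals
    r1_rads_per_hr at H+1 hour. A rough approximation, not the NUKEMAP model.
    """
    if t_hours <= 0:
        raise ValueError("t_hours must be positive")
    return r1_rads_per_hr * t_hours ** -1.2


# Each sevenfold increase in time cuts the rate roughly tenfold (the "7-10 rule").
r1 = 1000.0  # hypothetical H+1 dose rate, rads/hr
for t in (1, 7, 49, 343):
    print(f"H+{t:>3} h: {dose_rate(r1, t):9.3f} rads/hr")
```

Particles that stay aloft for many hours before settling have already lost most of their activity by the time they reach the ground, which is part of why high airbursts leave so little behind.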

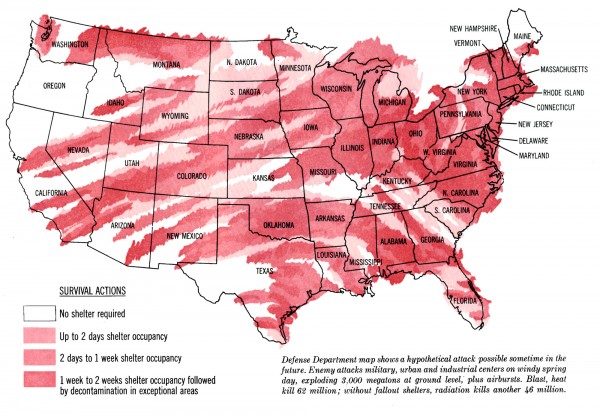

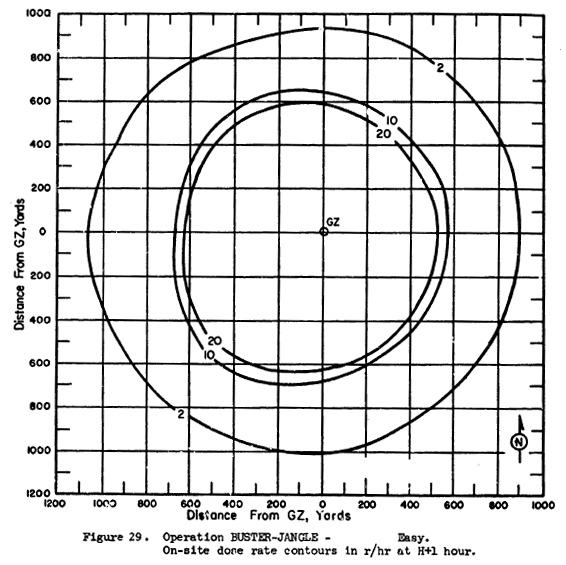

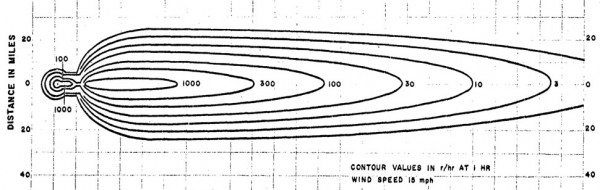

I thought this might be simplifying things a bit, so I looked up the fallout patterns for airbursts. And you know what? It seems to be correct. The radiation pattern you get from a “nominal” fission airburst looks more or less like this:

The on-site dose-rate contours for the Buster-Jangle “Easy” shot (31 kilotons), in rads per hour. Notice that barely any radiation goes farther than 1,100 yards from ground zero, and that even that is at a very low level (2 rads/hr).

That’s not zero radiation, but as you can see it is very, very local, and relatively limited. The radiation deposited falls within about the same range as the acute effects of the bomb itself, as opposed to something that affects people miles downwind.2

What about very large nuclear weapons? The only obvious US test that fit the bill here was Redwing Cherokee, from 1956. This was the first thermonuclear airdrop by the USA, and it had a total yield of 3.8 megatons — nothing to sniff at, and a fairly high percentage of it (at least 50%) from fission. But, sure enough, there appears to have been basically no fallout pattern as a result. A survey meter some 100 miles from ground zero picked up a two-hour peak of 0.25 millirem per hour some 10 hours later — which is really nothing to worry about. The final report on the test series concluded that Cherokee produced “no fallout of military significance” (all the more impressive given how “dirty” many of the other tests in that series were). Again, not truly zero radiation, but pretty close to it, especially given the megatonnage involved.3

The case of the surface burst is really quite different. When the fireball touches the ground, it ends up mixing the fission products with dirt and debris. (Or, in the case of testing in the Marshall Islands, coral.) The dirt and debris break into fine particles, but these are heavy. These heavier particles fall out of the cloud very quickly, starting about an hour after detonation and continuing for the next 96 hours or so. As they fall out, they both carry the nasty fission products attached to them and have other induced radioactivity as well. This is the fallout we’re used to from the big H-bomb tests in the Pacific (multi-megaton surface bursts on coral atolls were the worst possible combination for fallout) and even from the smaller surface bursts in Nevada.
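A back-of-the-envelope way to see why heavy particles come down close in and early while fine ones drift far downwind: fall time is roughly cloud height divided by settling speed, and downwind distance is roughly wind speed times fall time. The sketch below assumes a single steady wind and constant settling speeds, which is exactly the kind of simplification a scaling model makes and a “mathematical” model tries to avoid. The function name, cloud height, wind speed, and settling speeds are hypothetical illustration values, not measured ones.

```python
def landing_point(cloud_height_km, settling_speed_m_s, wind_speed_km_h):
    """Return (downwind distance in km, fall time in hours) for one particle.

    Deliberately crude: assumes one constant wind speed at every altitude and a
    constant settling speed, ignoring wind shear and particle-size spread.
    """
    fall_time_h = cloud_height_km * 1000.0 / settling_speed_m_s / 3600.0
    return wind_speed_km_h * fall_time_h, fall_time_h


# Hypothetical values for illustration: a 15 km cloud in a steady 25 km/h wind.
for label, speed in (("coarse", 4.0), ("medium", 0.5), ("fine", 0.1)):
    dist, hours = landing_point(15.0, speed, 25.0)
    print(f"{label:>6} particle: lands after ~{hours:.0f} h, ~{dist:.0f} km downwind")
```

With these made-up numbers the coarse particles land within about an hour and a few tens of kilometers, while the finest take nearly two days and travel on the order of a thousand kilometers.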

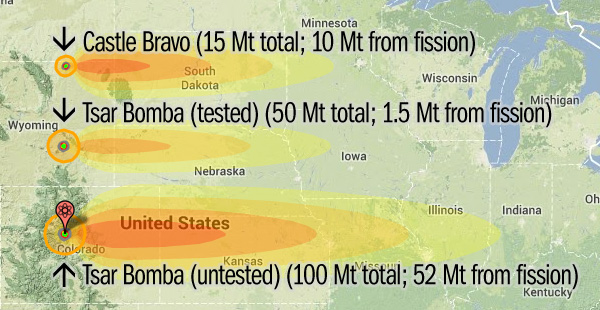

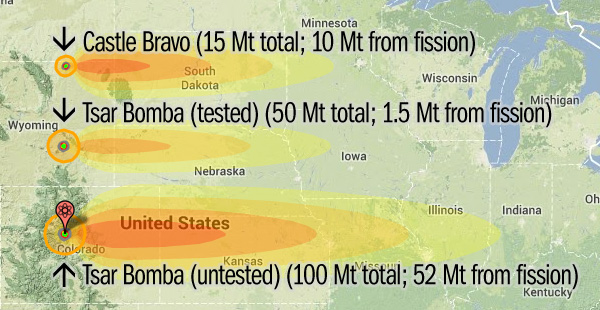

The other thing the new model helped me appreciate more is exactly how much the fission fraction matters. The fission fraction is the share of the total yield that is derived from fission, as opposed to fusion. Fission is the only reaction that produces highly-radioactive byproducts. Fusion reactions produce neutrons, which are a definite short-term threat, but not so much a long-term concern. Obviously all “atomic” or fission bombs have a fission fraction of 100%, but for thermonuclear weapons it can vary quite a bit. I’ve talked about this in a recent post, so I won’t go into detail here, but just emphasize that it was unintuitive to me that the 50 Mt Tsar Bomba, had it been a surface burst, would have had much less fallout than the 15 Mt Castle Bravo shot, because the latter had some 67% of its energy derived from fission while the former had only 3%. Playing with the NUKEMAP makes this fairly clear:

The darkest orange here corresponds to 1,000 rads/hr (a deadly dose); the next shade is 100 rads/hr (an unsafe dose); the next lighter orange is 10 rads/hr (ill-advised); and the lightest yellow is 1 rad/hr (not such a big deal). So the 50 Mt Tsar Bomba is entirely within the “unsafe” range, as compared to the large “deadly” areas of the other two. Background location chosen only for scale!
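The arithmetic behind that comparison is simple enough to spell out. Using only the yields and fission fractions given above, this sketch computes how much of each shot’s yield came from fission. The helper name is hypothetical, and fission yield is only one input to a fallout model, so contour areas don’t scale in simple proportion to it.

```python
def fission_yield_mt(total_yield_mt, fission_fraction):
    """Portion of the total yield (megatons) that came from fission."""
    if not 0.0 <= fission_fraction <= 1.0:
        raise ValueError("fission_fraction must be between 0 and 1")
    return total_yield_mt * fission_fraction


# Total yields and fission fractions as given in the paragraph above.
shots = {"Castle Bravo": (15.0, 0.67), "Tsar Bomba": (50.0, 0.03)}
for name, (total, fraction) in shots.items():
    print(f"{name}: {total:g} Mt total, ~{fission_yield_mt(total, fraction):.2f} Mt from fission")

bravo = fission_yield_mt(*shots["Castle Bravo"])
tsar = fission_yield_mt(*shots["Tsar Bomba"])
print(f"Bravo's fission yield is ~{bravo / tsar:.1f}x Tsar Bomba's")
```

That works out to roughly 10 Mt of fission for Bravo against 1.5 Mt for the Tsar Bomba, nearly seven times as much, despite the Tsar Bomba’s much larger total yield.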

The relevance of all of this for understanding nuclear war is considerable. Weapons that are designed to flatten cities, perhaps surprisingly, don’t really pose as much of a long-term fallout hazard. The reason is that the ideal burst height for such a weapon is usually set to maximize the 10 psi pressure radius, and that height is always fairly high above the ground. (The burst height that maximizes the radius of a given pressure is somewhat unintuitive, because it depends on how the wave reflects off the ground, so it doesn’t follow a straightforward curve.) Bad for the people in the cities themselves, to be sure, but not such a problem for those downwind.
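Burst heights worked out for one yield are commonly transferred to other yields by cube-root scaling, since blast distances and heights scale with the cube root of yield. Here is a minimal sketch under that assumption. The function name and the 100 m reference height for 1 kt are hypothetical placeholders, not a real 10 psi optimum, and the sketch only rescales a known optimum: it does not reproduce the reflection-dependent shape of the curve mentioned above.

```python
def scaled_burst_height(ref_height_m, ref_yield_kt, yield_kt):
    """Scale a burst height from a reference yield by the cube root of yield."""
    if ref_yield_kt <= 0 or yield_kt <= 0:
        raise ValueError("yields must be positive")
    return ref_height_m * (yield_kt / ref_yield_kt) ** (1.0 / 3.0)


# Hypothetical reference: treat 100 m as the "optimum" for a 1 kt burst.
# This number is a placeholder, not a real 10 psi optimum.
for y in (1, 20, 1000):
    print(f"{y:>5} kt -> {scaled_burst_height(100.0, 1.0, y):7.1f} m")
```

Under cube-root scaling, a thousandfold increase in yield raises the scaled height only tenfold.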


But weapons that are designed to destroy command bunkers, or missiles in silos, are the worst for the surrounding civilian populations. This is because such weapons are designed to penetrate the ground, and the fireballs necessarily come into contact with the dirt and debris. As a result, they kick up the worst sort of fallout that can stretch many hundreds of miles downwind.

So it’s sort of a damned-if-you-do, damned-if-you-don’t sort of situation when it comes to nuclear targeting. If you try to do the humane thing by only targeting counterforce targets, you end up producing the worst sort of long-range, long-term radioactive hazard. The only way to avoid that is to target cities — which isn’t exactly humane either. (And, of course, the idealized terrorist nuclear weapon manages to combine the worst aspects of both: targeting civilians and kicking up a lot of fallout, for lack of a better delivery vehicle.)

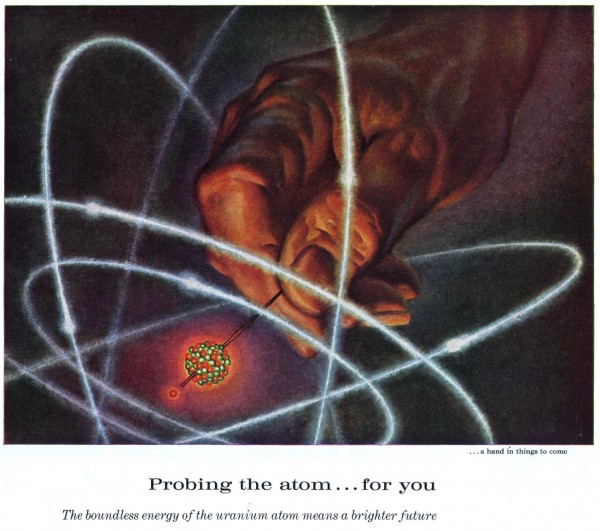

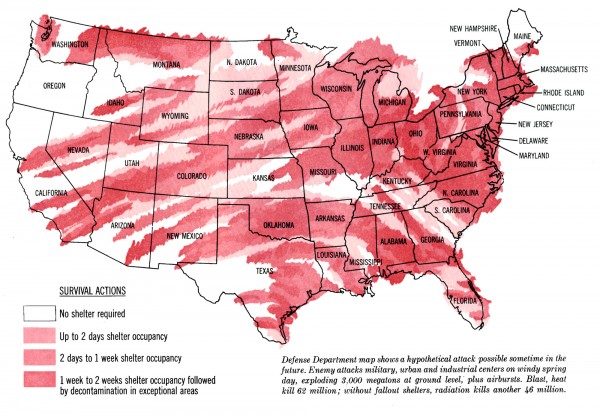

A rather wonderful 1970s fallout exposure diagram.

And it is worth noting: fallout mitigation is one of those areas where Civil Defense is worth paying attention to. You can’t avoid all contamination by staying in a fallout shelter for a few days, but you can avoid the worst, most acute aspects of it. This is what the Department of Homeland Security has been trying to convince people of, regarding a possible terrorist nuclear weapon. They estimate that hundreds of thousands of lives could be saved in such an event if people understood fallout better and acted upon it. But the level of actual compliance with such recommendations (stay put, don’t flee immediately) seems to me like it would be rather low.
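Why the first few days matter so much can be roughed out by integrating the textbook rule of thumb that fission-product dose rates fall off roughly as t^-1.2: the dose accumulated between times t1 and t2 (hours after detonation) comes to 5 · R1 · (t1^-0.2 − t2^-0.2), where R1 is the dose rate at H+1 hour. The sketch below assumes that approximation holds, uses a hypothetical R1 of 100 rads/hr and a hypothetical shelter protection factor of 10, and is not DHS’s methodology.

```python
def accumulated_dose(r1_rads_per_hr, t_start_h, t_end_h, protection_factor=1.0):
    """Dose (rads) accumulated between two times after detonation.

    Integrates the t**-1.2 rule of thumb, R(t) = r1 * t**-1.2, which gives
    5 * r1 * (t_start**-0.2 - t_end**-0.2). protection_factor divides the
    outdoor dose (e.g. 10 means a shelter cutting exposure tenfold).
    """
    if not 0 < t_start_h < t_end_h:
        raise ValueError("need 0 < t_start_h < t_end_h")
    outdoor = 5.0 * r1_rads_per_hr * (t_start_h ** -0.2 - t_end_h ** -0.2)
    return outdoor / protection_factor


r1 = 100.0  # hypothetical H+1 dose rate, rads/hr
first_two_days = accumulated_dose(r1, 1, 48)
rest_of_week = accumulated_dose(r1, 48, 168)
print(f"H+1 to H+48 outdoors:   {first_two_days:6.1f} rads")
print(f"H+48 to H+168 outdoors: {rest_of_week:6.1f} rads")
print(f"H+1 to H+48 sheltered (PF 10): {accumulated_dose(r1, 1, 48, 10):6.1f} rads")
```

Under these assumptions the first two days outdoors deliver several times the dose of the following five days, which is the basic logic behind “stay put.”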


In some sense, this made me feel even worse about fallout than I had before. Prior to playing around with the details, I’d assumed that fallout was just a regular result of such weapons. But now I see it more as underscoring the damnable irony of the bomb: that all of the choices it offers up to you are bad ones.

The fallout model used is what is known as a “scaling” model. This is in contrast with what Miller terms a “mathematical” model, which is a much more complicated beast. A scaling model lets you input only a few simple parameters (e.g. warhead yield, fission fraction, and wind speed) and the output are the kinds of idealized contours seen in the NUKEMAP. This model, obviously, doesn’t quite look like the complexities of real life, but as a rough indication of the type of radioactive contamination expected, and over what kind of area, it has its uses. The mathematical model is the sort that requires much more complicated wind parameters (such as the various wind speeds and sheers at different altitudes) and tries to do something that looks more “realistic.”

The mathematical models are harder to get ahold of (the government has a few of them, but they don’t release them to non-government types like me) and require more computational power (so instead of running in less than a second, they require several minutes even on a modern machine). If I had one, I would probably try to implement it, but I don’t totally regret using the scaling model. In terms of communicating both the general technical point about fallout, and in the fact that this is an idealized model, it does very well. I would prefer people to look at a model and have no illusions that it is, indeed, just a model, as opposed to some kind of simulation whose slickness might engender false confidence.

Fallout from a total nuclear exchange, in watercolors. From the Saturday Evening Post, March 23, 1963. Click to zoom.

The most important one is that fallout is primary a product of surface bursts. That is, the chief determinant as to whether there is local fallout or not is whether the nuclear fireball touches the ground. Airbursts where the fireball doesn’t touch the ground don’t really produce fallout worth talking about — even if they are very large.

I read this in numerous fallout models and effects books and thought, can this be right? What’s the ground got to do with it? A whole lot, apparently. The nuclear fireball is full of highly-radioactive fission products. For airbursts, the cloud goes pretty much straight up and those particles are light enough and hot enough that they pretty much just hang out at the top of the cloud. By the time they start to cool and drag enough to “fall out” of the cloud, they have diffused themselves in the atmosphere and also decayed quite a bit.1 So they are basically not an issue for people on the ground — you end up with exposures in the tenths or hundreds of rads, which isn’t exactly nothing but is pretty low. This is more or less what they found at Hiroshima and Nagasaki — there were a few places where fallout had deposited, but it was extremely limited and very low radiation, as you’d expect with those two airbursts.

I thought this might be simplifying things a bit, so I looked up the fallout patterns for airbursts. And you know what? It seems to be correct. The radiation pattern you get from a “nominal” fission airburst looks more or less like this:

The on-side dose rate contours for the Buster-Jangle “Easy” shot (31 kilotons), in rads per hour. Notice that barely any radiation goes further than 1,100 yards from ground zero, and that even that is very low level (2 rads/hr). Source.

What about very large nuclear weapons? The only obvious US test that fit the bill here was Redwing Cherokee, from 1956. This was the first thermonuclear airdrop by the USA, and it had a total yield of 3.8 megatons — nothing to sniff at, and a fairly high percentage of it (at least 50%) from fission. But, sure enough, appears to have been basically no fallout pattern as a result. A survey meter some 100 miles from ground-zero picked up a two-hour peak of .25 millirems per hour some 10 hours later — which is really nothing to worry about. The final report on the test series concluded that Cherokee produced “no fallout of military significance” (all the more impressive given how “dirty” many of the other tests in that series were). Again, not truly zero radiation, but pretty close to it, and all the more impressive given the megatonnage involved.3

The case of the surface burst is really quite different. When the fireball touches the ground, it ends up mixing the fission products with dirt and debris. (Or, in the case of testing in the Marshall Islands, coral.) The dirt and debris breaks into fine chunks, but it is heavy. These heavier particles fall out of the cloud very quickly, starting at about an hour after detonation and then continuing for the next 96 hours or so. And as they fall out, they are both attached to the nasty fission products and have other induced radioactivity as well. This is the fallout we’re used to from the big H-bomb tests in the Pacific (multi-megaton surface bursts on coral atolls was the worst possible combination possible for fallout) and even the smaller surface bursts in Nevada.

The other thing the new model helped me appreciate more is exactly how much the fission fraction matters. The fission fraction is the amount of the total yield that is derived from fission, as opposed to fusion. Fission is the only reaction that produces highly-radioactive byproducts. Fusion reactions produce neutrons, which are a definite short-term threat, but not so much a long-term concern. Obviously all “atomic” or fission bombs have a fission fraction of 100%, but for thermonuclear weapons it can vary quite a bit. I’ve talked about this in a recent post, so I won’t go into detail here, but just emphasize that it was unintuitive to me that the 50 Mt Tsar Bomba, had it been a surface burst, would have had much less fallout than the 15 Mt Castle Bravo shot, because the latter had some 67% of its energy derived from fission while the former had only 3%. Playing with the NUKEMAP makes this fairly clear:

The darkest orange here corresponds to 1,000 rads/hr (a deadly dose); the slightly darker orange is 100 rads/hr (an unsafe dose); the next lighter orange is 10 rads/hr (ill-advised), the lightest yellow is 1 rad/hr (not such a big deal). So the 50 Mt Tsar Bomba is entirely within the “unsafe” range, as compared to the large “deadly” areas of the other two. Background location chosen only for scale!

But weapons that are designed to destroy command bunkers, or missiles in silos, are the worst for the surrounding civilian populations. This is because such weapons are designed to penetrate the ground, and the fireballs necessarily come into contact with the dirt and debris. As a result, they kick up the worst sort of fallout that can stretch many hundreds of miles downwind.

So it’s sort of a damned-if-you-do, damned-if-you-don’t sort of situation when it comes to nuclear targeting. If you try to do the humane thing by only targeting counterforce targets, you end up producing the worst sort of long-range, long-term radioactive hazard. The only way to avoid that is to target cities — which isn’t exactly humane either. (And, of course, the idealized terrorist nuclear weapon manages to combine the worst aspects of both: targeting civilians and kicking up a lot of fallout, for lack of a better delivery vehicle.)

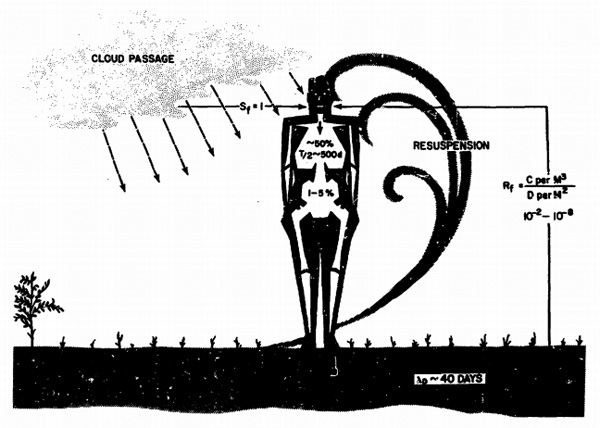

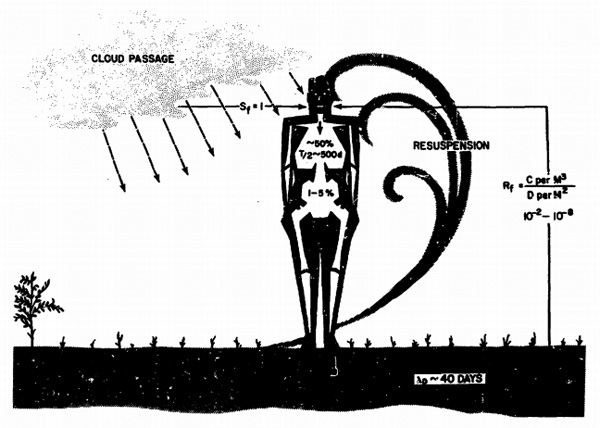

A rather wonderful 1970s fallout exposure diagram. Source.

In some sense, this made me feel even worse about fallout than I had before. Prior to playing around with the details, I’d assumed that fallout was just a regular result of such weapons. But now I see it more as underscoring the damnable irony of the bomb: that all of the choices it offers up to you are bad ones.

Notes

- Blasts low enough to form a stem do suck up some dirt into the cloud, but it happens later in the detonation when the fission products have cooled and condensed a bit, and so doesn’t matter as much. [↩]

- Underwater surface bursts, like Crossroads Baker, have their own characteristics, because the water seems to cause the fallout to come down almost immediately. So the distances are not too different from the airburst pattern here — that is, very local — but the contours are much, much more radioactive. [↩]

- Why didn’t they test more of these big bombs as airdrops, then? Because their priority was on the experimentation and instrumentation, not the fallout. Airbursts were more logistically tricky, in other words, and were harder to get data from. Chew on that one a bit… [↩]

Visions

The bomb and its makers

Tuesday, July 30th, 2013

As part of the “make this blog actually work again” campaign, I’ve changed some things on the backend, which required me to change the blog URL from http://nuclearsecrecy.com/blog/ to http://blog.nuclearsecrecy.com/. Fortunately, even if you don’t update your bookmarks, the old links should all still work automatically. It seems to be working a lot better at the moment — in the sense that I can once again edit the blog — so that’s something!


In all of the new NUKEMAP fuss, and the fact that my blog kept crashing, I didn’t get a chance to mention that I had two multimedia essays up on the website of The Bulletin of the Atomic Scientists. I’m pretty happy with both of these, both visually and in terms of the text.

The first was published a few weeks ago, and was related to my much earlier post relating to the badge photographs at Los Alamos. “The faces that made the Bomb” has so far proved to be the one thing I’ve done that people end up bringing up in casual conversation without realizing I wrote it. (The scenario is, I meet someone new, I mention I work on the history of nuclear weapons, they ask me if I’ve seen this thing on the Internet about the badge photographs, I answer that I in fact wrote it, a slight awkwardness follows.)

Some of the badge photographs are the ones that anyone on here would be familiar with — Oppenheimer, Groves, Fuchs, etc. But I enjoyed picking out a few more obscure characters. One of my favorites of these is Charlotte Serber, wife of the physicist and Oppenheimer student Robert Serber. Here’s my micro-essay:

Charlotte Serber was one of the many wives of the scientists who came to Los Alamos during the war. She was also one of the many wives who had their own substantial jobs while at the lab. While her husband, Robert Serber, worked on the design of the first nuclear weapons, Charlotte was the one in charge of running the technical library. While “librarian” might not at first glance seem vital to the war project, consider J. Robert Oppenheimer’s postwar letter to Serber, thanking her that “no single hour of delay has been attributed by any man in the laboratory to a malfunctioning, either in the Library or in the classified files. To this must be added the fact of the surprising success in controlling and accounting for the mass of classified information, where a single serious slip might not only have caused us the profoundest embarrassment but might have jeopardized the successful completion of our job.” Serber fell under unjustified suspicion of being a Communist in the immediate postwar, and, according to her FBI file, her phones were tapped. Who had singled her out as a possible Communist, because of her left-wing parents? Someone she thought of as a close personal friend: J. Robert Oppenheimer.

Charlotte was also the only woman Division Leader at Los Alamos, as the director of the library. She was also the only Division Leader barred from attending the Trinity test — on account of a lack of “facilities” for women there. She considered this a gross injustice.

What I like about Charlotte is that she highlights that many of the “Los Alamos wives” actually did work that was crucial to the project (and there were scientists amongst the “wives” as well, such as Elizabeth R. Graves, whom I also profiled), and that the work of a librarian can be pretty vital (imagine if they hadn’t had good organization of their reports, files, and classified information). But I also find Charlotte’s story amazing because of the betrayal: Oppenheimer the friend, Oppenheimer the snitch.

I should note that Oppenheimer’s labeling of Charlotte was probably not meant to be malicious: he was going over lists of people who might have Communist backgrounds when talking to the Manhattan Project security officers. He rattled off a number of names, and even said he thought most of them probably weren’t themselves Communists. This, of course, meant that they got flagged as possible Communists for the rest of their lives. Oppenheimer’s attempts to look loyal to the security system, even his attempts to be benign about it, were terrible failures in the long run, both for him and for his poor friends. Albert Einstein put it well: “The trouble with Oppenheimer is that he loves a woman who doesn’t love him—the United States government.”

The other profile I want to highlight here is that of Kenneth T. Bainbridge. Bainbridge was a Harvard physicist who was in charge of organizing Project Trinity, the first test of the atomic bomb, in July 1945. It was a big job: bigger, I think, than most people realize. You don’t just throw an atomic bomb on top of a tower in the desert and set it off. The test had a pretty large staff, required a ton of theoretical and practical work, and, in the end, was an experiment that, ideally, destroyed itself in the process. Here was my Bainbridge blurb:

During the Manhattan Project, Harvard physicist Kenneth Bainbridge was in charge of setting up the Trinity test; afterward he became known as the person who famously said: “Now we are all sons of bitches.” Years later he wrote a letter to J. Robert Oppenheimer explaining his choice of words: “I was saying in effect that we had all worked hard to complete a weapon which would shorten the war but posterity would not consider that phase of it and would judge the effort as the creation of an unspeakable weapon by unfeeling people. I was also saying that the weapon was terrible and those who contributed to its development must share in any condemnation of it. Those who object to the language certainly could not have lived at Trinity for any length of time.” Oppenheimer’s reply to Bainbridge’s sentiments was simple: “We do not have to explain them to anyone.”

I’ve had that Bainbridge/Oppenheimer exchange in my files for a long time, but never really had a great opportunity to put it into print. To flesh out the context a little more, the exchange took place in the wake of Lansing Lamont’s popular book, Day of Trinity (1965). Bainbridge was one of the sources Lamont had talked to, and he gave Lamont the “sons of bitches” quote. Oppenheimer’s full reply to Bainbridge took some digs at the book:

“When Lamont’s book on Trinity came, I first showed it to Kitty; and a moment later I heard her in the most unseemly laughter. She had found the preposterous piece about the ‘obscure lines from a sonnet of Baudelaire.’ But despite this, and all else that was wrong with it, the book was worth something to me because it recalled your words. I had not remembered them, but I did and do recall them. We do not have to explain them to anyone.”

The “obscure lines” were supposedly a code sent by Oppenheimer to Kitty to say that the test had worked. In Bainbridge’s files at the Harvard Archives there is quite a lot of material on the Lamont book from other Manhattan Project participants; most of them found a lot of fault with it on a factual basis, but admired its writing and presentation.

Bainbridge makes for a good segue into my other BAS multimedia essay, “The beginning of the Bomb,” which is about the Trinity test and which came out just before the test’s 68th anniversary two weeks ago. It was also something of a reprise of themes I’d first played with on the blog, namely in my post on “Trinity’s Cloud.” I’ve been struck that while Trinity was so extensively documented, the same few pictures of it and its explosion are reused again and again. Basically, if it isn’t one of the “blobs of fire” pictures, or the Jack Aeby early-stage fireball/cloud photograph (the one used on the cover of The Making of the Atomic Bomb), then it doesn’t seem to exist. Among other things related to Trinity, I got to include two of my favorite alternative Trinity photographs.

The first is this ghostly apparition above. How strange and occult the atomic bomb looks in this view. While most photographs of the bomb are concerned with capturing it at a precise fraction of a second (a nice precursor to the famous Rapatronic photographs of the 1950s), this one does something quite different, and quite unusual. It is a long-exposure photograph spanning several seconds. The caption indicates (assuming I am interpreting it correctly) that the exposure began several seconds before the explosion and continued for two seconds after the beginning of the detonation, which would explain why so many pre-blast details are visible.

The result is what you see here: a phantom whose resemblance to the “classic” Trinity explosion pictures is more evocative than definite. If you view it at full size, you can just make out features of the test site, such as the cables that held up the tower (along with some strange, blobby artifacts associated with darkroom work). I somewhat wish this were the image of “the atomic bomb” that we all had in our minds: dark, ghastly, tremendous. Instead of seeing just a moment after the atomic age began, we see in a single image the transition between one age and the next.

Most of the photographs of Trinity are of its first few seconds. But this one is not. It may be the only good photograph I have seen of the late-stage Trinity mushroom cloud. It is striking, is it not? A tall, dark column of smoke, lightly mushroomed at the top, with a larger cloud layer above it. “Ominous” is the word I keep coming back to, especially once you know that the cloud in question was highly radioactive.

One of the things I found while researching the behavior of mushroom clouds for the NUKEMAP3D was that while the mushroom cloud is a ubiquitous symbol of the bomb, it is specifically the early-stage mushroom cloud whose photograph gets shown repeatedly. Almost all nuclear detonation photographs are of the first 30 seconds or so of the explosion, when the mushroom cloud is still quite small, and usually quite bright and mushroomy. The late-stage cloud (about 4 to 10 minutes after detonation, depending on the yield of the bomb) is a much larger, darker, and more unpleasant thing.

Why did we so quickly move from thinking of the atomic bomb as a burst of fire to thinking of it as a cloud of smoke? The obvious answer would be Hiroshima and Nagasaki, where we lacked the instrumentation to see the fireball, and could see only the cloud. But I’m still struck that our visions of these things remain so constrained to a few examples, a few moments in time, out of so many other possibilities, each with its own quite different visual associations.

Redactions | Visions

Castle Bravo revisited

Friday, June 21st, 2013

No single nuclear weapons test did more to establish the grim realities of the thermonuclear age than Castle BRAVO. Detonated on March 1, 1954, it was the highest-yield test in the United States’ highest-yield nuclear test series, exploding with a force of 15 million tons of TNT. It was also the greatest single radiological disaster in American history.

Among BRAVO’s salient points:

I say “almost frank” because there was some distinct lack of frankness about it. Lewis Strauss, the secrecy-prone AEC Chairman at the time and an all-around awful guy, made some rather misleading statements about the reasons for the accident and its probable effects on the exposed native populations. His goal was reassurance, not truth. But, as with so many things in the nuclear age, the narrative got out of his control pretty quickly, and the fear of fallout was intensified whether he wanted it to be or not.

We now know that the Marshallese suffered quite a lot of long-term harm from the exposures, and that the contaminated areas stayed contaminated for a lot longer than the AEC guessed they would. Some of this discrepancy came from honest ignorance: the AEC didn’t know what they didn’t know about fallout. But a lot of it also came from a desire to appear on top of the situation, when the AEC was anything but.

I’ve been interested in BRAVO lately because I’ve been interested in fallout. It’s no secret that I’ve been working on a big new NUKEMAP update (I expect it to go live in a month or so) and that fallout is but one of the amazing new features I’m adding. It’s been a long time coming: I had originally wanted to add a fallout model a year ago, but it turned out to be a non-trivial thing to implement. It’s not hard to throw up a few scaled curves, but coming up with a model that satisfies the aesthetic needs of the general NUKEMAP user base (that is, the people who want it to look impressive but aren’t interested in the details) and also has enough technical chops that the informed don’t just immediately dismiss it (because I care about you, too!) involved digging up some rather ancient fallout models from the Cold War (even going out to the National Library of Medicine to get one rare one in its original paper format) and converting them all to JavaScript so they can run in modern web browsers. But I’m happy to say that as of yesterday, I’ve finally come up with something I’m pleased with, and so I can now clean up the Beautiful Mind-style filing system in my office and living room.

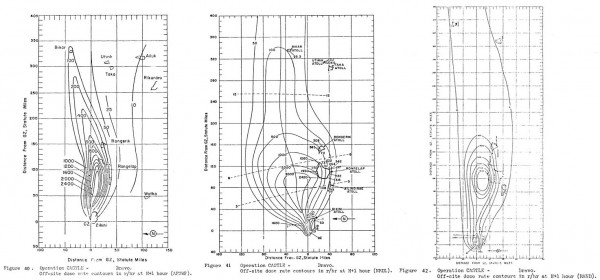

Recently I was sent a PDF of a January 2013 report by the Defense Threat Reduction Information Analysis Center (DTRIAC) that looks back on the history of BRAVO. It doesn’t seem to be easily available online (though it is unclassified), so I’ve posted it here: “Castle Bravo: Fifty Years of Legend and Lore (DTRIAC SR-12-001).” I haven’t had time to read the whole thing, but skipping around has been rewarding: it takes a close look at the questions of fallout prediction, contamination, and several “myths” that have circulated since 1954. It notes, for example, that the fallout contour plot above was originally created by the USAF Air Research and Development Command (ARDC), and that “it is unfortunate that this illustration has been so widely distributed, since it is incorrect.” The plume, they explain, actually under-represents the extent of the fallout: the worst of the fallout went farther and wider than the diagram shows.

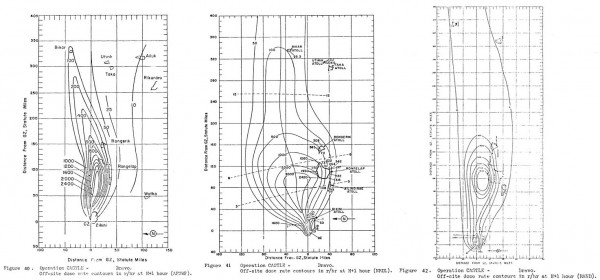

You can get a sense of the variation by looking at some of the other plots created of the BRAVO plume:

The AFSWP diagram on the left is relatively long and narrow; the NRDL one in the middle is fat and horrible. The RAND one at the right is something of a compromise. All three, though, show the fallout going farther than the ARDC model does, some 50 to 100 miles farther. On the open ocean that doesn’t matter so much, but apply that to a densely populated part of the world and it’s pretty significant!

DTRIAC SR-12-001 is also kind of amazing in that it has a lot of photographs of BRAVO and the Castle series that I’d never seen before, some of which you’ll see around this post. One of my favorites is this one, of Don Ehlher (from Los Alamos) and Herbert York (from Livermore) in General Clarkson’s briefing room on March 17, 1954, with little mockups of the devices that were tested in Operation Castle:

There’s nothing classified there (the shapes of the various devices have long been declassified), but it’s still kind of amazing to see their bombs on the table, as it were. They look like thermoses full of coffee. (The thing at far left might be a cup of coffee, for all I can tell; unfortunately the image quality is not great.)