Max Tegmark starts at 36:14 into the above film

The Helen Caldicott Foundation Presents

Max Tegmark

Artificial Intelligence and the Risk of Accidental Nuclear War: A Cosmic Perspective

Symposium: The Dynamics of Possible Nuclear Extinction

The New York Academy of Medicine, 28 February - 1 March 2015

Introduction by Dr. Helen Caldicott

The next speaker is Max Tegmark who, as I mentioned earlier, was mentioned in the Atlantic Monthly piece along with Stephen Hawking. Max Tegmark has been concerned about nuclear war risk since his teens and started publishing articles about it at the age of 20. He is President of the Future of Life Institute, which aims to prevent human extinction, as discussed in his popular book, Our Mathematical Universe. His scientific interests also include precision cosmology and the ultimate nature of reality. He is an MIT physics professor with more than 200 technical papers and is featured in dozens of science documentaries. His work with the Sloan Digital Sky Survey on galaxy clustering shared the first prize in Science magazine’s Breakthrough of the Year 2003. His title is “Artificial Intelligence and the Risk of Accidental Nuclear War.”

Max Tegmark:

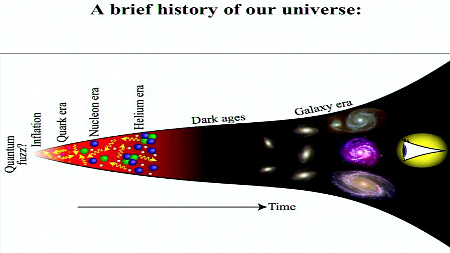

So, as you heard, I am a physicist, a cosmologist; I spend much of my time studying our universe, trying to figure out what’s out there, how old it is, how big it is, how it got here. And I want to share with you my cosmic perspective. You heard from Theodore Postol here that what we’ve done with nuclear weapons is probably the dumbest thing we’ve ever done here on earth. I’m going to argue that it might also be the dumbest thing ever done in our universe.

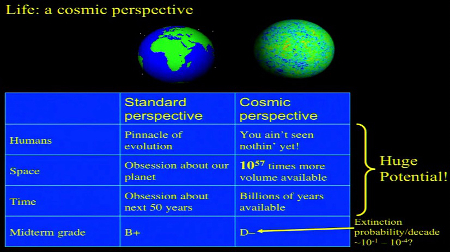

Why a D-minus? Because from a standard perspective a lot of people feel that humans are the pinnacle of evolution, this is where we got this planet, we’re limited to it. Some people are very obsessed about the next 50 years, maybe the next election cycle even. Right? So, if we wipe ourselves out in 50 years maybe it’s not such a big deal. From a cosmic perspective that is completely retarded. We ain’t seen nothing yet. It would be completely naïve, in a cosmic perspective, to think this is best, this is as good as it can possibly get. We have 10 to the power 57 times more volume at our disposal for life out there. We don’t have 50 years. We have billions and billions of years available. We have an incredible future opportunity that we stand to squander if we go extinct or in other ways screw up. People argue passionately about what the probability is that we wipe out in any given year. Some might say it’s one percent. Some might say it’s much lower—a percent of a percent. Some might say it’s higher—10 percent. Any of these numbers are just completely pathetic. If it’s one percent you expect maybe we’ll last for 100 years. That’s pretty far from the billions of years of potential we have there, right? So, come on, let’s be a little more ambitious here. Let me just summarize in one single slide why I think it’s so pathetic, how reckless we are being stewards of life. Namely this slide.

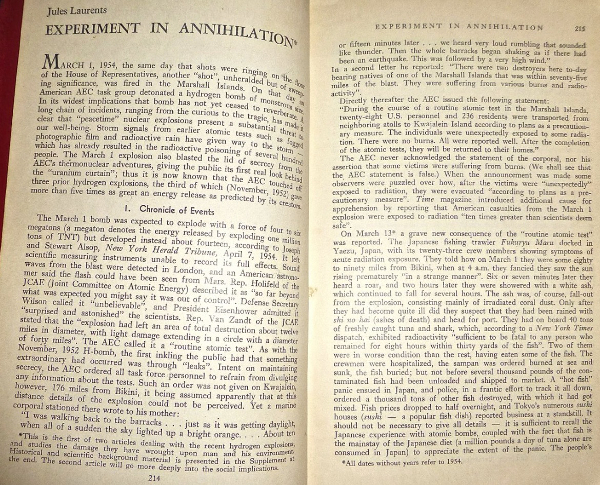
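
The “one percent means about 100 years” remark follows from simple arithmetic. As a minimal sketch, assuming the annual probability p is constant and independent from year to year (a simplification, not something the talk states), the expected wait until the first catastrophe is 1/p years. The function names below are illustrative only.

```python
def expected_years_until_catastrophe(p: float) -> float:
    """Mean of a geometric distribution: expected years until the first event.

    Assumes a constant, independent annual probability p (an assumption
    made for illustration, not a claim from the talk).
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in the interval (0, 1]")
    return 1.0 / p


def survival_probability(p: float, years: int) -> float:
    """Probability that no event occurs in `years` consecutive years."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in the interval [0, 1]")
    return (1.0 - p) ** years


if __name__ == "__main__":
    # The three annual probabilities mentioned in the talk:
    # a percent of a percent, one percent, and ten percent.
    for p in (0.0001, 0.01, 0.10):
        wait = expected_years_until_catastrophe(p)
        century = survival_probability(p, 100)
        print(f"p = {p:.4f}: expected wait {wait:,.0f} years, "
              f"chance of surviving 100 years {century:.1%}")
```

With p = 0.01 the expected wait is 100 years, and the chance of getting through a full century without incident is only about 37 percent.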

So these are some pretty screwed up priorities we have as a species. When I first became aware of this nuclear situation, and I was about 14, I was really quite shocked by how so many grownups could be so dumb. When I was 17 I felt I wanted to do whatever little things I can do for this. I was in Stockholm, Sweden, I went and volunteered to write some articles for a local magazine. I wrote a bunch of articles about nuclear weapons and nuclear war and so on. The oldest article, ever, to my knowledge about the U.S. hydrogen bomb project—which from my physicist point-of-view was when it started getting incredibly scary—is this one [“Experiment in Annihilation,” by Jules Laurents], from 1954, which, to my knowledge, for the first time really lays out what had largely been unknown to the broader public; the fact that America had just done its fourth hydrogen bomb test and that there had actually been three [earlier] ones before that.

Now this article was translated into French by Jean-Paul Sartre. It was actually read into the Congressional Record by an American politician who gave no attribution whatsoever to where he had gotten this article. And nobody knew actually. Still nobody really knows publicly, who wrote this article because Jules Laurents doesn’t exist. This was written by someone who was so worried about getting in trouble with the McCarthy folks during the time that he wrote this under a false name. So I figured in honor of this meeting I would tell you who wrote this. You will be the first to know. It was my father. Harold Shapiro wrote this article. And if anyone wants it I can e-mail you a copy.

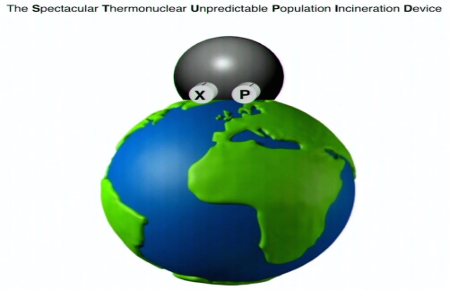

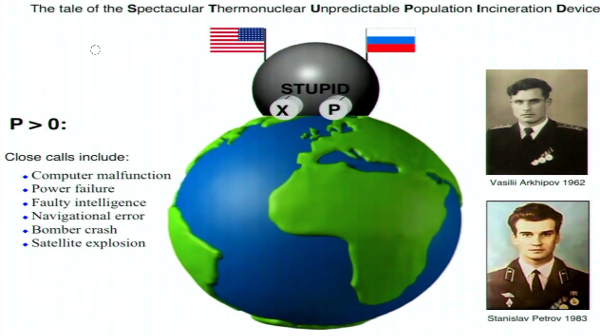

Here we are on this planet, and we humans have decided to build this device. Let’s cartoon-fashion draw it like this okay?

This device—it’s a very complicated device—it’s a bit like a Rube Goldberg machine inside. A very elaborate system. Nobody—there’s not a single person on the planet who actually understands how 100 percent of it works. Okay? But we do know some things about it. It has two knobs on the front, X and P, which I’ll explain to you shortly. And it was so complicated to build that it really took the talent and resources from more than one country, they worked really hard on it, for many, many years. And not just on the technical side—to invent the technology to be able to create what this device does. Namely, massive explosions around the planet.

And you do fake tests and in the event of people who fail to launch the missiles, you fire them, replace them. And so a lot of clever thought has gone into building STUPID. That’s what this device does. It’s kind of remarkable that we went ahead and put so much effort into building it since actually, really, there’s almost nobody on this spinning ball in space who really wants it to ever get used, who ever wants this stuff to blow up. But we’ll continue talking throughout the conference about why we humans decided—made it anyway. Let’s focus now instead a bit on how it works. What are these two knobs? The X knob determines the total explosive power that this thing brings to bear and the P knob, it determines the probability that this thing will just, BOOM!, go off in any random year for whatever reason. As we’ll see, one of the cool features of it is that it can spontaneously go off even if nobody actually wants it to. Alright? So you can tune these two knobs X and P. Let’s look a little bit at how this has evolved over time, the settings of these dials.

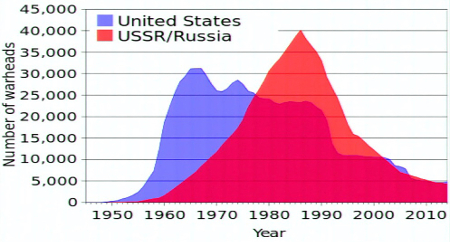

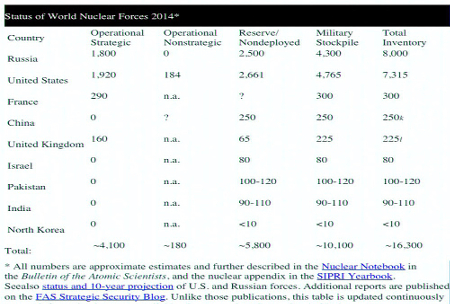

We peaked in the total, in the setting of the X knob around the mid-eighties with about 63,000 warheads. Since then, the total number of warheads, as you know, has gone down quite a bit. But sadly the drop has stopped and things haven’t gone down much at all in the last decade.

A lot of my friends, unfortunately, take the mere fact that this curve has gone down as their reason to stop worrying about this, which I think is a very bad idea.

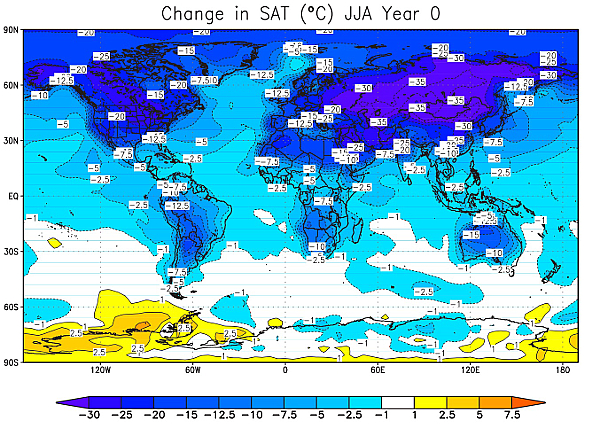

What you’re seeing here—and we’ll hear much more about it, of course, in the next talk—is simply the average surface temperature change during the two years after a global nuclear war with roughly today’s arsenals. And it’s in Celsius—so you can see, typically drop the temperature by about 20 Celsius throughout most of the American breadbasket here. And some parts in the Soviet farming areas it drops by 35 Celsius—70 degrees Fahrenheit. What does that mean in plain English? You don’t have to think very hard, you don’t have to have a great imagination to imagine that if you turn this corn field into this, you might have some impact on the world food supply.

So concluding that the setting of this X knob is so low now that we should stop worrying would be the ultimate naïveté, in my opinion.

We don’t know what P is, obviously; there’s good debate about it, and we should discuss it here at the meeting. But we know very rigorously that it’s not zero, because, as so many of you are very well aware, there have been enormous numbers of close calls caused by all sorts of things: computer malfunctions, power failure, faulty intel, navigational error, a crashing bomber, an exploding satellite, et cetera. [Eric Schlosser, Command and Control: Nuclear Weapons, the Damascus Accident, and the Illusion of Safety (New York: Penguin), 2013] And, in fact, if it weren’t for very heroic acts of certain people (we’ll talk more about them: Vasili Arkhipov, for example, and Stanislav Petrov), we might already have had a global nuclear war. So P is not zero. What about the change of P over time? We talked about how X has changed. How has P changed?

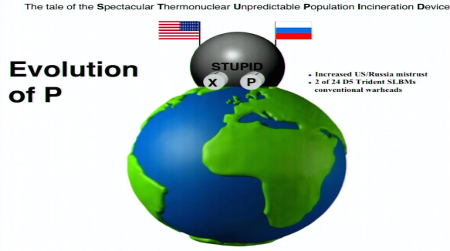

There are various reasons for this. Obviously increasing U.S.-Russian mistrust is a very bad thing and that’s certainly happening now. Then there are a lot of just random, dumb things that we do which increase P. Just one little example among many that’s been discussed is this plan to replace 2 out of the 24 submarine launched ballistic missiles on the Tridents by conventional warheads that you can fire at North Korea. A great setup for misunderstanding, since if you’re the Russians and you see this missile coming you have absolutely no way of knowing what kind of warheads it has.

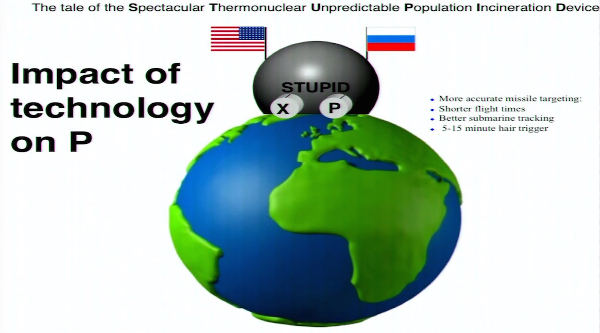

We heard from Theodore Postol already various examples of how technology is perhaps increasing the risk of accidental nuclear war. Mutual Assured Destruction worked great when missiles were accurate enough to destroy a city but not accurate enough to destroy a silo. That made it very disadvantageous to do any kind of first strike. Now, thanks to early forms of artificial intelligence that are enabling very precise targeting of missiles, you can hit very, very accurately, and that’s better for a first strike. Having submarine-launched ballistic missiles very close to their targets is also good for a first strike, since the enemy gets less time to react. These very short flight times, and also the better ability to track where the enemy submarines are and take them out, mean a lot of people are a lot jumpier. There are very short times to decide, so both the U.S. and Russia, of course, are on hair-trigger alert: launch-on-warning, where you have only 5 to 15 minutes to decide. Obviously, things like this can increase P.

What about artificial intelligence? We heard from Helen how there’s a broad consensus that artificial intelligence is progressing very rapidly. In fact I’ve spent a lot of time on this: I just came back last month from a conference in Puerto Rico that my wife and I and many of my colleagues organized, where we brought together many of the top AI builders in the world to discuss the future of AI. And a lot of people felt that things that they thought, 5 years ago, were going to take 20 years to happen have already happened. There’s huge progress. Obviously, it’s very hard to forecast what will happen looking decades ahead if we get human-level AI or beyond. But we can say some things about what’s going to happen much sooner, and what’s already happening as computers get more powerful and have more and more impact on the world.

For example, if you can make computer systems that are more reliable than people in properly following proper protocol, it’s an almost irresistible temptation for the military to implement them. We’ve already seen a lot of the communications and command and even analysis being computerized in the military. Now properly following proper protocol might sound like a pretty good thing until you read of the Stanislav Petrov incident. Why was it, in 1983, when he got this alarm that the U.S. was attacking the Soviet Union, that he decided not to pass this along to his superiors? He decided to not follow proper protocol. He was a person. If he had been a computer he would have followed proper protocol. And maybe something much worse would have happened.

Another thing which is disturbing about computerizing things more and more is that we know that the more you de-personalize decisions, the more you take “system 1” (as Kahneman would say) out of the loop, the more likely we are to do dumb things. [Daniel Kahneman, Thinking, Fast and Slow (New York: Farrar, Straus and Giroux), 2013] If President Obama had a person with him who he was friends with who carried the nuclear launch codes surgically implanted next to her heart, and the only way for him to get them was to stab her to death first, that would actually make him think twice before starting a nuclear war. And it might be a good thing, right? If you take that away, if all you need to do is press a button, there are fewer inhibitions. If you have a super-advanced artificial intelligence system that you just delegate the decision to, it’s even easier because you’re not actually authorizing launch. Right? You’re just delegating the authority to this system that IF something happens in the future, then please go ahead and properly follow proper protocol. Right? That worries me.

Then there are bugs, right? Raise your hand if you’ve ever been given the blue screen of death from your computer. Let’s hope the blue screen of death never turns into the red sky of death. This is funny if it’s just 2 hours of your presentation that got destroyed but it’s not so funny if it’s your planet.

Finally, another thing which is happening as artificial intelligence systems get more and more advanced is they become more and more inscrutable black boxes where we just don’t understand what reasoning they use though we still trust them. I drive with my GPS. Just last week we were up in New Hampshire with the kids and my GPS said, “Turn left on Rufus Colby Road.” We drive down there and suddenly there’s this enormous snow bank blocking the road. I have no idea how it came to that conclusion but I trusted it. If we have a super-advanced computer system which is telling all the Russian military leadership and Putin that, ‘Yes, there is an American missile attack happening right now; here is the cool map, high-res graphic,’ they might just trust it without knowing how it came to the conclusion. If it’s a human, you can ask the human, ‘How did you come to this conclusion?’ You can challenge them. You can speak the same language. It’s much harder, these days, to query a computer and clear up misunderstandings.

So I’m not standing here saying we know for sure that AI is going to increase the risk of accidental nuclear war. But we certainly can’t say it won’t. And it’s very likely that it will have strong effects. This is something we need to think about. It would be naïve to think that the rise of artificial intelligence is going to have no impact on the nuclear situation.

Until I had a total u-turn. Because we have discovered that, yes, first of all, there are way more planets than we thought there were. But we’ve also discovered that it seems like life advanced enough to build telescopes and technology like we have is much more rare than you might have thought. In fact we haven’t found any evidence at all so far that there is any [life] anywhere in our observable universe besides us. We don’t know which way it is. I argue in my book that we are probably the only life within this region of space that we have access to that’s come this far. Which, if it’s true, makes a huge responsibility. Why are all these galaxies beautiful? It’s because you see them. That’s why they’re beautiful. If we annihilate life and there’s no consciousness with telescopes, they are not beautiful anymore. They’re just a giant waste of space. So what I’m saying here is that rather than look to our universe to give meaning to us, it’s we who are giving meaning to our universe. And we should really be good stewards of this.

Of course, we all love technology. Every way in which 2015 is better than the stone age is because of technology. But it’s absolutely crucial that before we just go ahead and develop technologies to be powerful, we also develop the wisdom to handle that technology well. Nuclear technology is the first technology powerful enough that it’s really, really driven this whole... Artificial intelligence is another example of this. We, with our organization, have so far spent most of our effort on things to do with AI. But we care deeply about nuclear issues as well and we have a lot of awesome people in our organization. We are very eager to hear from you ideas for how we can help make the future of life actually exist, and how we can actually help all of your efforts to keep the world, to keep ourselves, safe from nuclear weapons. Thank you.
